❝ Life can only be understood backwards; but it must be lived forwards. ❞

Project the Past into the Future

Every business must plan for the future. Many management decisions depend upon estimating the value of one or more variables at some future time. What are the monthly sales projections for the next three months? How many employees will our company have at this time next year? What is the estimated interest rate two months from now? How large will the inventory be during the summer months? Some of these questions, such as the estimated interest rate, involve forecasting a single value. Inventory, however, might consist of thousands of different items, each requiring a specific forecast. Planning for the future in such a company involves thousands of forecasts.

To forecast the future values of a variable, we need to understand the past. We need to understand the pattern by which the values of the variable are generated over time. The following material describes a core set of patterns with their visualizations that distinguish between the pattern and its realization as data.

Processes

The process is the core unit for organizing business activities and the basis for forecasting.

Business process: Structured set of procedures that generate output over time to accomplish a specific business goal.

A functioning business is a set of interrelated business processes that ultimately lead to the delivery and servicing of a product or service. Managing a business means managing its processes, so evaluating the ongoing performance of the constituent business processes is a central task for managers. To assess process performance, consider outcome variables that generate values over time, such as the following examples.

- Supply Chain: Ship time of raw materials following the submission of each purchase order

- Inventory: Daily inventory of a product

- Manufacturing: Length of a critical dimension of each machined part

- Marketing: Ongoing satisfaction measured with customer ratings

- Production: Amount of cereal by weight in each cereal box

- Order Fulfillment: Pick time, elapsed time from order placement until the order is boxed and ready for shipment

- Accounting: Time required to forward a completed invoice from the time the order is placed

- Sales: Satisfaction rating of customers after purchasing a new product

- Health Care: Elapsed time from an abnormal mammogram until the biopsy

The values for these variables vary over time. How can we predict the future values of these variables?

One way to predict a variable’s future value is to discover its pattern of variation over past time periods and extend that pattern into the future.

Ongoing business processes generate a stream of data over time. Understanding how the process performed in the past is the key to forecasting its future performance. If sales have been steadily increasing every month for the last two years, and this trend is expected to continue, then a forecast of future sales can project this trend into the next three months. Successful forecasting is the successful search for patterns from past performance.

Randomness vs Structure

Unfortunately, uncovering the underlying structure of previous time values is not always straightforward.

The analysis of the pattern of variation of a variable’s values over time must distinguish the underlying signal, the true pattern, from the random noise, the random sampling variation that surrounds the pattern.

Noise obscures the true pattern, but it is structure and pattern that can be projected into the future. Constructing models by searching for patterns buried among noise and instability is central not only to forecasting but also to statistical thinking in general.

To illustrate, consider a process as simple as coin flipping. Flip a coin 10 times. How many heads will you get? We predict five, but random variation ensures that the obtained value can vary anywhere from 0 to 10, with five just the most likely value. In fact, 10 flips of a fair coin yield exactly five heads only about 24.6% of the time (252 of the 1024 equally likely sequences of heads and tails). In more than 75% of sets of 10 flips, the number of heads is something other than five.
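The exact probability follows from the binomial distribution with 10 trials and a probability of 0.5 for heads on each flip. A minimal base R sketch computes it and, as an illustration only, simulates many sets of 10 flips; the seed and the 10,000 repetitions are arbitrary choices, not values from the text.

```r
# Exact probability of exactly 5 heads in 10 flips of a fair coin
p_five <- dbinom(5, size = 10, prob = 0.5)   # same as choose(10, 5) / 2^10, about 0.246
p_five
1 - p_five                                   # probability of any count other than five

# Simulate 10,000 sets of 10 flips to see the same pattern empirically
set.seed(1)                                   # arbitrary seed, for reproducibility
heads <- rbinom(10000, size = 10, prob = 0.5) # number of heads in each set of 10 flips
mean(heads == 5)                              # proportion of sets with exactly 5 heads
table(heads)                                  # spread of the counts between 0 and 10
```

The simulated proportion lands near the exact value but not exactly on it, which is itself an instance of the random variation the text describes.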

The values of a variable at least partially vary according to random influences from one value to the next. Each data value is determined by an underlying stable component consistent with the underlying pattern, such as the fairness of a coin, and a random component that consists of many undetermined causes.

A data value results from the influence of the underlying pattern plus the error term, the sum of many undetermined influences.
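One way to write this decomposition for the value observed at time \(t\) follows. The symbols are introduced here for illustration and are not the text’s own notation: \(y_t\) is the data value, \(\mu_t\) the stable pattern, and \(\varepsilon_t\) the random error, the sum of the many undetermined influences.

\[
y_t = \mu_t + \varepsilon_t, \qquad t = 1, 2, \ldots, n
\]

For a stable process, \(\mu_t\) is the same constant \(\mu\) at every time \(t\), and the errors vary about zero with the same standard deviation \(\sigma\). The non-stable patterns described later alter this picture: a level shift, trend, or seasonality changes \(\mu_t\) over time, and a variability shift changes \(\sigma\).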

Random variability is pervasive, and its impact on data analysis is profound. Even if the structure of the process is disentangled from the noise, the noise is always present. The exact next value cannot be known until that outcome occurs.

For example, moving beyond coin flips, hospital staff do not know when the next patient will arrive in the emergency room until the patient arrives. Nor do they know how many patients will be admitted on any one evening. A hospital may see 17 people admitted to the emergency room one Saturday evening and 21 people another Saturday evening. You do not know how many overtime hours your department will log next month until next month happens. And you only know how much the next tank of fuel will cost when you fill the tank again.

The opposite of randomness is pattern, stability, and structure, the basic tendencies that underlie the observed random variation. The same hospital that admitted 17 and then 21 patients to the emergency room on two Saturday evenings admitted 8 and 6 people on the corresponding Wednesday evenings. All four admission counts (17, 21, 8, 6) are different, but the pattern is that more people were admitted on a Saturday evening than on a Wednesday evening. Any capable forecasting algorithm would leverage this knowledge of the differential pattern of arrivals.
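A minimal base R sketch of how even a naive forecast can use this pattern: average the four admission counts from the text by day of the week. Four values are far too few for a real forecast, so treat this only as an illustration that the day-level averages (19 and 7) carry information that the overall mean of 13 hides.

```r
# Admissions for two Saturday and two Wednesday evenings, as given in the text
admits <- data.frame(
  day   = c("Saturday", "Saturday", "Wednesday", "Wednesday"),
  count = c(17, 21, 8, 6)
)

# Average admissions for each day: a naive forecast using only the day-of-week pattern
by_day <- tapply(admits$count, admits$day, mean)
by_day

# The overall mean ignores the pattern and fits neither day well
mean(admits$count)
```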

The Future

A central task of data analytics is to reveal and then quantify the underlying tendencies and patterns. Sometimes the task of uncovering and quantifying structure is straightforward; other times it requires as much intuition and skill as it does formal application of sophisticated analytic forecasting procedures. To delineate this stable pattern from the observed randomness, construct a set of relationships expressed as a model.

Model: Mathematical expression that describes an underlying pattern apart from the random variation exhibited by the data.

The ability to accurately forecast is necessary to business success. Management decisions apply to the future. Try running a business in which the forecasted sales never materialize.

The outcomes of a process include a random component, but the model describes the underlying, stable pattern. The data consist of this stability plus added randomness that, to some extent, obscures the pattern. Projecting this pattern into the future involves the following steps.

- Describe: Visually assess the inherent variation in the data

- Infer: Build a model that expresses the stable component underlying this variation

- Forecast: From the model project this stable component into the future as the estimate of future reality

- Evaluate: Wait for some time to pass and then compare the forecast to what actually happened
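The four steps can be sketched in base R on simulated data, under the assumption that the process is stable, so the model is a single constant mean. The simulated values, the split into 20 past and 4 future values, and the use of mean absolute error to evaluate the forecasts are illustrative choices, not prescriptions from the text.

```r
# Hypothetical stable process: constant mean 50 plus random error with SD 5
set.seed(42)                          # arbitrary seed
y <- 50 + rnorm(24, mean = 0, sd = 5)

past   <- y[1:20]                     # data available when the forecast is made
future <- y[21:24]                    # values that only become known later

# Describe: visualize and summarize the variation in the past values
plot(past, type = "b", xlab = "Index", ylab = "y")
summary(past)

# Infer: for a stable process, the model is a single constant mean
mu_hat <- mean(past)

# Forecast: project the stable component forward
fc <- rep(mu_hat, length(future))

# Evaluate: once the future values arrive, compare them with the forecasts
errors <- future - fc
mean(abs(errors))                     # mean absolute error of the forecasts
```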

The knowledge obtained from this analysis begins with a description of what is and an inference of the underlying structure that culminates in a forecasting model, followed by a forecast of what will likely be and then refinement of the model to improve the accuracy of the forecasts. The primary obstacle to identifying patterns from the past is the presence of sampling error.

Visualize Patterns Over Time

The values of a variable recorded over time result from an ongoing process. Visualize these values to reveal the pattern of their variability over time in one of two fundamental ways, the run chart and the time series, discussed next.

Run Chart

Consider the time dimension of an ongoing process. Effective management decisions, such as when to change the process and when to leave it alone, as well as understanding its performance and evaluating the effectiveness of a deliberate change, require knowledge of how the system behaves over time. Evaluating a process first requires accurate measurements of one or more relevant outcome variables over time.

Run chart: Plot of the values of a variable identified by their order of occurrence, with line segments connecting individual points.

Use the run chart to plot the performance of the process over time when the dates or times at which each value was recorded are not available or not necessary. However, the data values must still be ordered sequentially according to the date or time they were acquired. The run chart displays the sequential position of each data value in the overall sequence on the horizontal axis.

Index: The ordinal position of each value in the overall sequence of data values, numbered from 1 to the last data value.

A run chart is a specific type of line chart. Display the values collected over time on the vertical axis. On the horizontal axis, display the Index. A run chart may also contain a center line, such as the median of the data values to help compare the larger values to smaller values.
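The text obtains run charts with the lessR function Plot(). As a sketch of what such a chart contains, the hypothetical helper run_chart() draws one with base R graphics: values in order of occurrence plotted against the Index, connected by line segments, with the median as a dashed center line. The input values are illustrative only.

```r
# A minimal run chart in base R (hypothetical helper, not part of lessR)
run_chart <- function(y, ylab = "Value") {
  index <- seq_along(y)                   # Index: 1, 2, ..., n in order of occurrence
  plot(index, y, type = "b",              # "b": points joined by line segments
       xlab = "Index", ylab = ylab)
  center <- median(y)
  abline(h = center, lty = "dashed")      # median as the center line
  invisible(center)                       # return the center line value
}

# Illustrative values only, not the pick-time data
run_chart(c(4.2, 3.9, 4.5, 4.1, 3.8, 4.4, 4.0, 4.6), ylab = "Hours")
```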

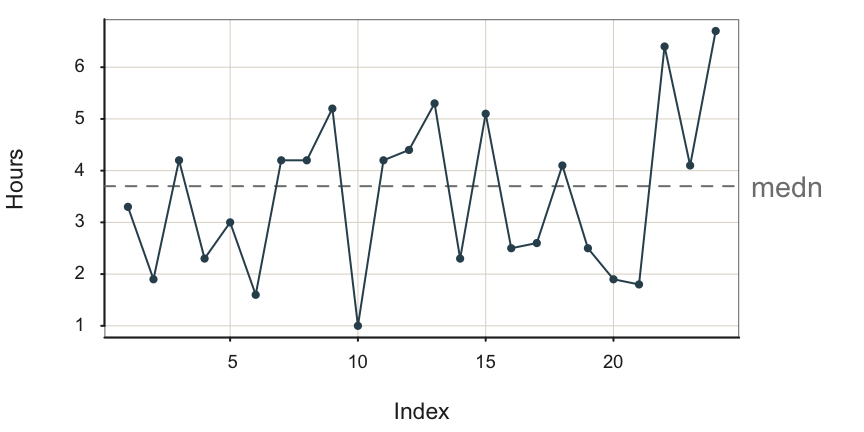

As an example of a run chart, consider pick time, the elapsed time from order placement until the order is packaged and ready for shipment. Pick time is central to the more general order fulfillment process, and requires management oversight to minimize times and to detect any bottlenecks should they occur. The variable is Hours, the average number of business hours required for pick time, assessed weekly, illustrated in Figure 1. The data are available on the web as a text data file at the following location.

https://web.pdx.edu/~gerbing/data/pick.csv

First, read the data, which contains the variable Hours, into the d data frame.

d <- Read("https://web.pdx.edu/~gerbing/data/pick.csv")

Obtain the run chart and associated statistics with the lessR function Plot() to display the values in sequence.

Plot(Hours, run=TRUE)

run: Parameter to inform the function that the values should be plotted sequentially on the y-axis, in the order in which they occur in the data table, with the Index plotted on the x-axis. Set the run parameter to TRUE.

Plot() automatically connects adjacent points with a line segment. The center line, the median, is automatically added if the values of the variable tend to oscillate about the center.

What does the manager seek to understand from a run chart? A primary task of process management is to assess process performance in the context of the random variation inherent in the process, to know:

- The central level of performance of the process, mean or median

- The amount of random variation about the central level inherent in the process
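Both quantities in this list can be computed directly from the data. A minimal sketch with a hypothetical helper, process_summary(), applied to illustrative values, using the mean and median for the central level and the sample standard deviation for the random variation about it:

```r
# Central level (mean, median) and random variation (standard deviation) of a process
process_summary <- function(y) {
  c(mean = mean(y), median = median(y), sd = sd(y))
}

process_summary(c(2, 4, 6, 4, 4))   # mean 4, median 4, sd = sqrt(2)
```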

The next task is to actively manage process performance. Figure 1 shows a concerning trend of pick time deteriorating toward the end of data collection. The last three data values are above the median, and the maximum value of 6.70 is the last data value. Are these larger pick time values random variation, or do they signal a true deterioration in the process? More data, carefully scrutinized by management, would answer that question. As needed, adjust the central level of performance up or down to the target level, and continue to minimize the random variation about the desired average level of performance.

Process Stability

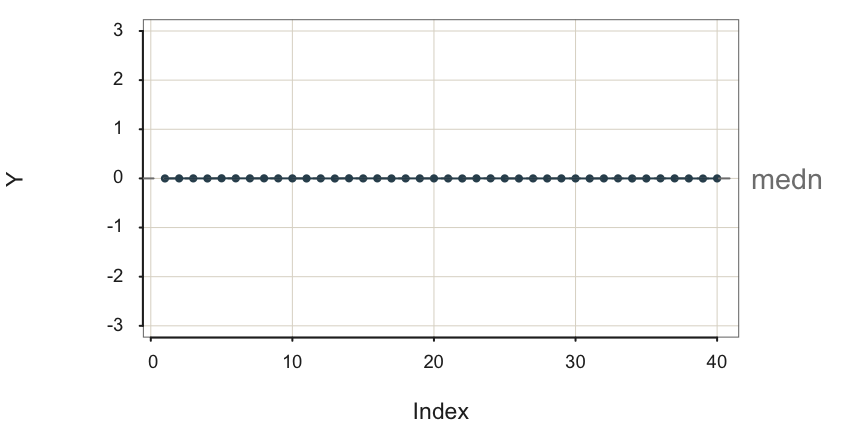

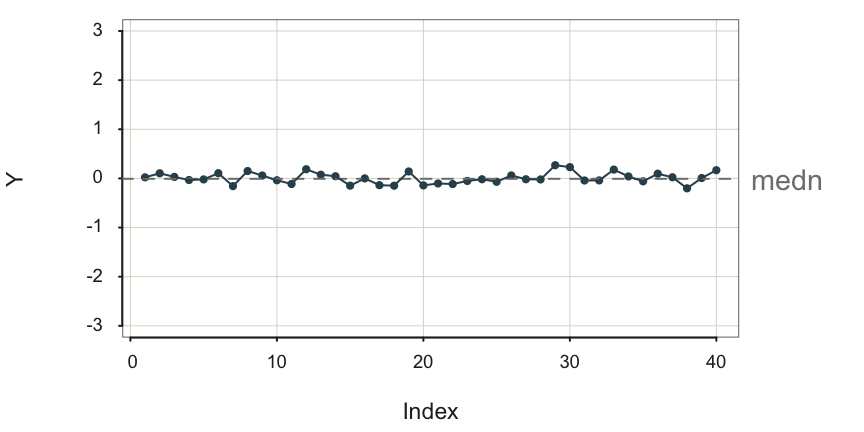

Processes always exhibit variation, but that variation can result from stable underlying population values of the mean and standard deviation.

Stable process: Data values generated by the process result from the same overall level of random variation about the same mean.

The run chart of a stable process displays random variation about the mean at a constant level of variability.
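A stable process can be simulated as a constant mean plus random error with a constant standard deviation. In this sketch the hypothetical helper simulate_stable() generates normally distributed errors; the normal distribution, the mean of 0, the standard deviation of 0.5, and the seed are illustrative assumptions, not the settings behind the figures in this section. Splitting the output into consecutive blocks shows means and standard deviations that differ only by sampling error.

```r
# Simulate a stable process: the same mean and the same SD at every time point
simulate_stable <- function(n, mu = 0, sigma = 1) {
  mu + rnorm(n, mean = 0, sd = sigma)
}

set.seed(123)                          # arbitrary seed
y <- simulate_stable(200, mu = 0, sigma = 0.5)

# Four consecutive blocks of 50 values each: for a stable process,
# block means and SDs vary only by sampling error
block <- rep(1:4, each = 50)
round(tapply(y, block, mean), 3)
round(tapply(y, block, sd), 3)
```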

A stable process, or constant-cause system, produces variable output but with an underlying stability in the presence of random variation. The output changes, but the process itself is constant.

W. Edwards Deming established that a process must first be evaluated for stability, a prerequisite for verifying the quality control of the process output. There is an entire literature dedicated to this proposition. Deming became revered worldwide for his contributions to quality control, especially in Japan as it rebuilt its industrial capabilities following World War II.

Deming describes a stable process as follows.

There is no such thing as constancy in real life. There is, however, such a thing as a constant-cause system. The results produced by a constant-cause system vary, and in fact may vary over a wide band or a narrow band. They vary, but they exhibit an important feature called stability. … [T]he same percentage of varying results continues to fall between any given pair of limits hour and hour, day after day, so long as the constant-cause system continues to operate. It is the distribution of results that is constant or stable. When a … process behaves like a constant-cause system … it is said to be in statistical control.

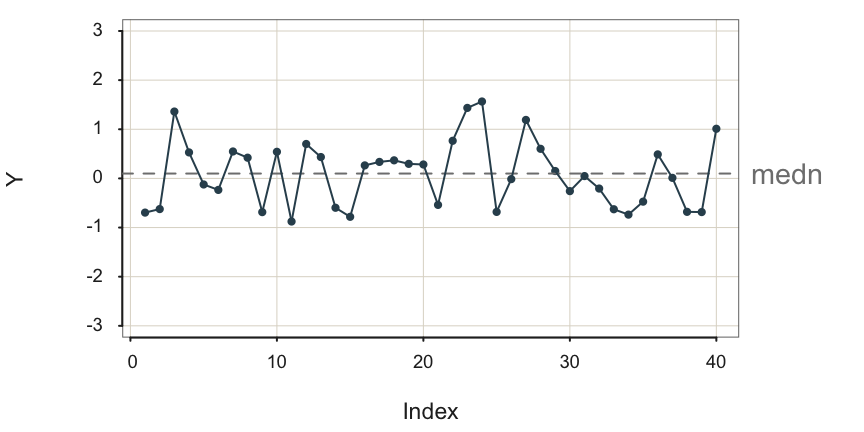

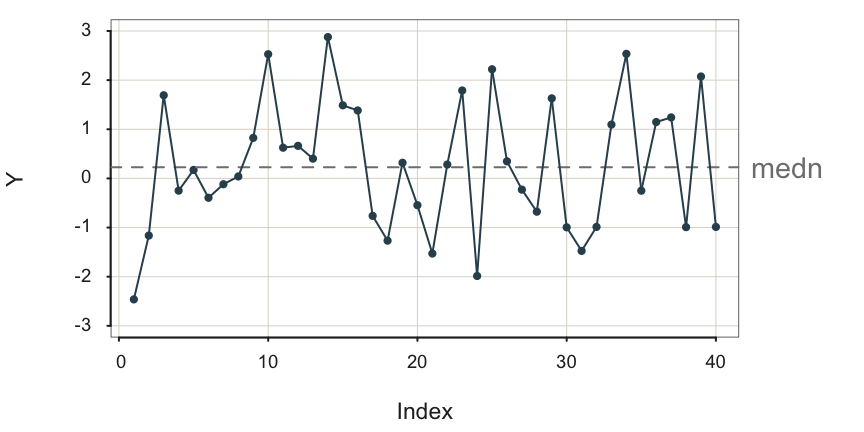

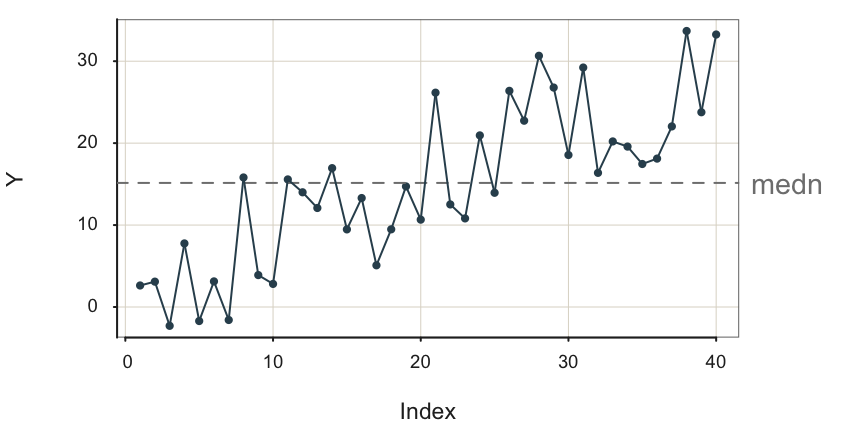

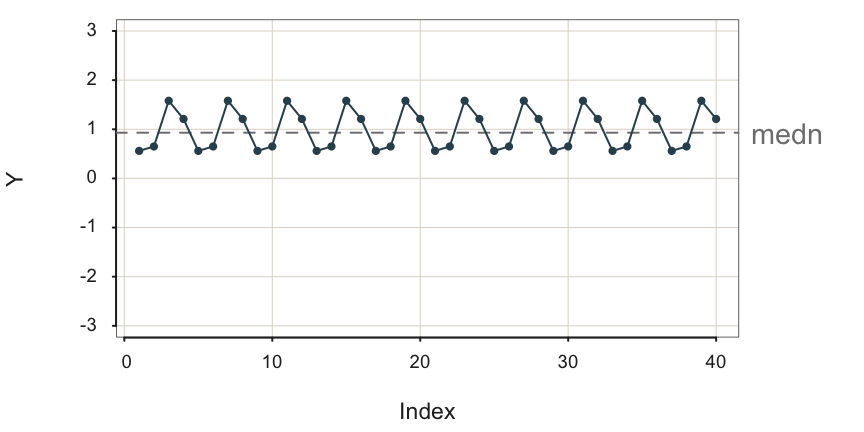

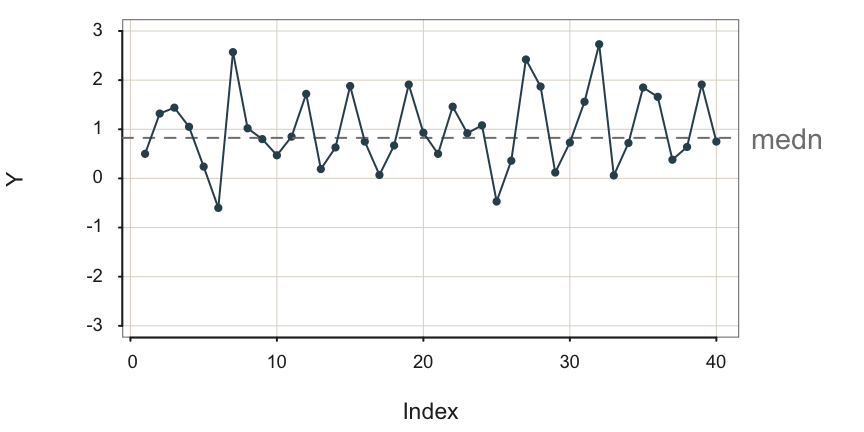

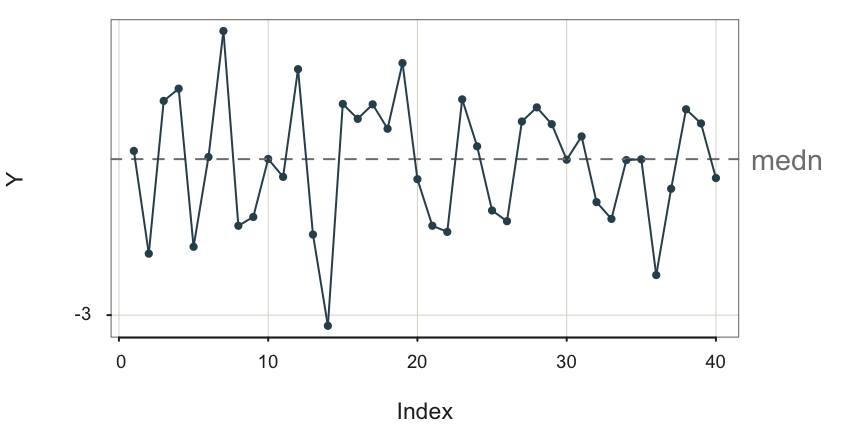

To illustrate the random sampling error inherent in a sequence of values generated over time, consider the four stable processes shown in Figure 2, Figure 3, Figure 4, and Figure 5. To facilitate comparison, all four visualizations share the same y-axis scale, from -3 to 3. In the following figure captions, \(m\) is the sample mean and \(s\) is the sample standard deviation. For illustrative purposes, each of the four run charts displays the median as the center line.

What differs across these four stable processes is their variability. All four processes are stable, but the variability of their output differs. The sample standard deviation of these four stable processes varies from 0.0010 to 1.3364.

A stable process is defined not by small variability of output but by a constant level of variability about the same mean.

To create a forecast, first understand the structure of the underlying process and identify the pattern to be projected into the future. When viewing Figure 5, with its large amount of random error, realize that the process is stable even though the outcomes are highly variable. Specifically, recognize that the fluctuations are not the regular fluctuations of seasonality but instead are irregular, with no apparent pattern. With no seasonality and the same mean underlying all the data values, the forecast for the next value is the mean of the previous values.1

1 Assumptions of a stable process are better evaluated from an enhanced version of a run chart called a control chart, essential for the analysis of quality control.
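For a stable process, the forecast as the mean of the previous values takes one line of code. A minimal sketch with a hypothetical helper, forecast_stable(), which returns the same forecast for every future period; large variability in the data does not change this point forecast, only the uncertainty around it.

```r
# Stable process, no trend or seasonality: forecast = mean of the previous values
forecast_stable <- function(y, h = 1) {
  rep(mean(y), h)                      # same forecast for each of the next h periods
}

forecast_stable(c(0.3, -1.2, 0.8, 1.5, -0.4), h = 3)   # 0.2 0.2 0.2
```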

Non-Stable Processes

Some other patterns found in the data values for a variable collected over time are described next.

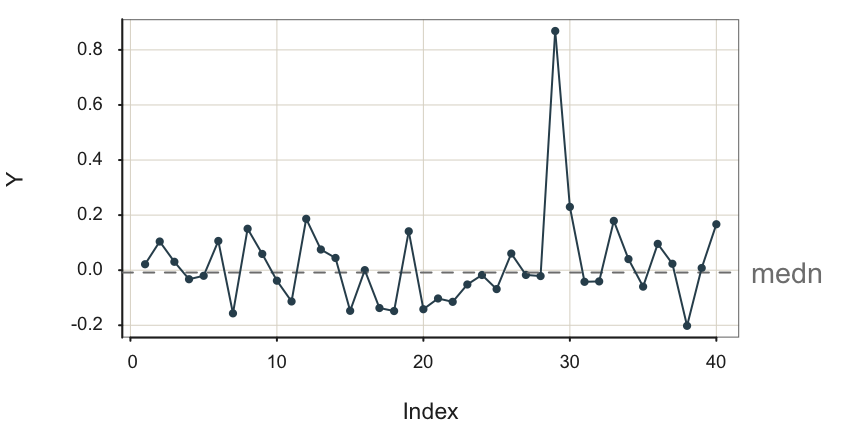

Outlier

Of particular interest in the analysis of any set of data values, including the outcomes of a process, is an outlier.

Outlier: A data value considerably different from all or most of the remaining data values in the sample.

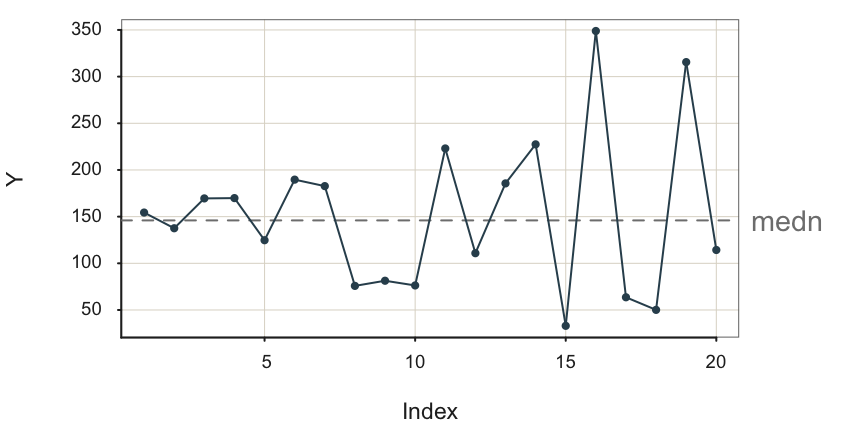

In Deming’s terminology, an outlier indicates the presence of a special cause, a temporary event that resulted in a deviant data value. Figure 6 contains an outlier.

Given a process otherwise in control but with an outlier, the best forecast is not the average of all the values. Suppose it is established that the outlier is a data value sampled from a process distinct from the one that generated the remaining data values. Then it is meaningless to analyze all the data values as a single sample. Figure 6 likely shows the results from two separate processes.

Understand why the outlier occurred and ensure the conditions that generated the outlier do not occur for the forecasted values.

When observing an outlier, understand how and why it occurred. This understanding is almost always essential because it often leads to a change in the procedure, presumably for the better. The most trivial cause is a simple data entry error, which produces data on which no management decision should be based. Or, more fundamentally, is a particular shipment of metal in the manufacturing process defective? Or is the output from a specific machine defective?
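A common rule of thumb for flagging candidate outliers, offered here as an assumption rather than as the text’s method, is the boxplot rule: flag values more than 1.5 interquartile ranges beyond the hinges, which base R’s boxplot.stats() implements. The sketch uses illustrative values. A flagged value should be excluded from a forecast only after its cause is understood.

```r
# Illustrative values from an otherwise stable process with one deviant value
y <- c(10, 11, 9, 10, 12, 10, 30)

# Boxplot rule: values beyond 1.5 IQRs from the hinges are flagged
out <- boxplot.stats(y)$out
out                                    # 30

mean(y)                                # mean of all values, pulled up by the outlier
mean(y[!(y %in% out)])                 # mean of the remaining values
```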

Process Shift

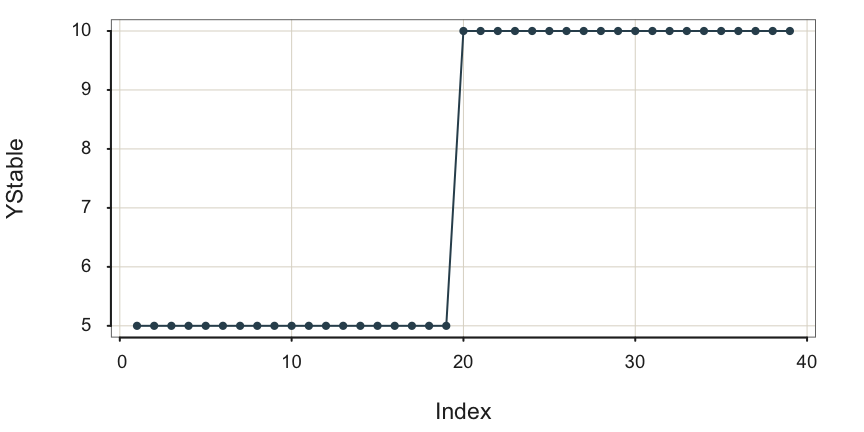

Level Shift

Another pattern is a process in which some event shifts the level of the process up or down, essentially transforming one process into another. Figure 7 illustrates the underlying structure of a stable process, free from random error (the structure, not the data), which then shifts to a different level.

Figure 8 illustrates this process as observed in reality. Random error partially obscures the underlying stability, which is followed by an upward shift of the process mean that defines a new, stable process.

Once a process has changed, such as a level shift, the data values that occurred before the change are no longer relevant for discerning the current underlying signal from which to generate a forecast.

Hopefully, there are enough data after the level shift to discern the new underlying structure. Recognize that after the level shift there is a new process, which, of course, may be desirable, for example, if the process output is profitability or, for an industrial process, volume of output.
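A minimal sketch of forecasting after a level shift, assuming the point at which the shift occurred is already known, for example from the date of a documented change; detecting the shift from the data alone is a separate problem. The helper forecast_after_shift() and the simulated values are hypothetical.

```r
# Forecast from the values after a known level shift only
forecast_after_shift <- function(y, shift_at, h = 1) {
  post <- y[shift_at:length(y)]        # values generated by the new process
  rep(mean(post), h)
}

set.seed(7)                                                # arbitrary seed
y <- c(5 + rnorm(30, sd = 0.5), 8 + rnorm(15, sd = 0.5))   # shift begins at value 31

mean(y)                                  # mixes the old and new processes
forecast_after_shift(y, shift_at = 31)   # close to the new level of 8
```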

Variability Shift

The amount of variability inherent in the system can also change over time. Consider an industrial assembly in which the setup that manufactures a part becomes looser over time, increasing the variability of the dimensions of the output. Figure 9 illustrates this pattern.

Each data point in Figure 9 is sampled from a different process. Each successive process generates output more variable than the previous process.
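A variability shift can be simulated by keeping the mean constant while the standard deviation grows over time. This sketch uses illustrative settings (a mean of 0, a standard deviation rising from 0.2 to 2, and an arbitrary seed) and compares the sample standard deviations of the first and second halves of the sequence.

```r
# Hypothetical process: mean fixed at 0, SD increasing steadily over time
set.seed(11)                             # arbitrary seed
n <- 200
sigma_t <- seq(0.2, 2, length.out = n)   # a different, larger SD for each successive value
y <- rnorm(n, mean = 0, sd = sigma_t)    # rnorm() uses one SD per generated value

# Variability of the first half versus the second half
sd(y[1:100])
sd(y[101:200])
```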

Trend

Another type of non-stability is trend, again, a desirable outcome if it is positive and the process describes profitability.

Trend: The long-term direction of movement of the data values over time, a general tendency to increase or decrease.

The example in Figure 10 is a positive, linear trend with considerable random error obscuring the underlying signal.

Without random error, a linear trend plots as a straight line with either a positive or a negative slope.

Extend the trend “line” into the future.

The trend line can be an actual straight line, linear, or it can be curvilinear, such as exponential or logarithmic growth or exponential decay.
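A minimal sketch of extending a linear trend, assuming a straight-line trend fitted by least squares with lm(); the helper forecast_trend() and the simulated values are hypothetical. A curvilinear trend such as exponential growth would call for a different model, for example a linear trend fitted to the logarithm of the values.

```r
# Fit a straight-line trend and extend it h periods into the future
forecast_trend <- function(y, h = 1) {
  idx <- seq_along(y)                           # time index 1, 2, ..., n
  fit <- lm(y ~ idx)                            # least-squares trend line
  new_idx <- data.frame(idx = length(y) + seq_len(h))
  unname(predict(fit, newdata = new_idx))       # points on the extended line
}

set.seed(3)                                     # arbitrary seed
y <- 20 + 0.5 * (1:40) + rnorm(40, sd = 3)      # positive linear trend plus random error
forecast_trend(y, h = 4)
```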

Seasonality

Another typical, non-stable pattern in data over time is seasonality.

Seasonality: Pattern of fluctuations that repeats at regular intervals with the same intensity, such as the four quarters of a year.

This first plot, in Figure 11, is of the underlying structure, the signal without the contamination of random error.

In the plot in Figure 12, some random error is added to the additive seasonality.

The process illustrated in Figure 13 exhibits much random error that tends to obscure the underlying signal, the additive seasonality.

The forecast for a data value that reflects seasonality with no pattern of increase or decrease, such as in Figure 13, depends only on the season and the impact of a particular season on the deviation from the overall mean of the process.

Estimate and apply the seasonal effect for the season at which the forecasted data value occurs.

As always, the more random error, the more difficult it is to estimate the seasonal effects. How these seasonal effects are estimated is discussed later.
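As one simple possibility, not necessarily the estimation method presented later, each season’s additive effect can be estimated as the average deviation of that season’s values from the overall mean. This sketch assumes no trend, a series that begins in season 1, and complete cycles; the helper seasonal_effects() and the noise-free values are hypothetical.

```r
# Additive seasonal effect: mean of each season minus the overall mean
seasonal_effects <- function(y, period) {
  season <- rep_len(seq_len(period), length(y))   # season number of each value
  tapply(y, season, mean) - mean(y)
}

y <- rep(c(-2, 0, 2, 0), times = 3) + 13          # 4 seasons, 3 complete cycles
eff <- seasonal_effects(y, period = 4)
eff                                               # -2 0 2 0

# Forecast for a future value in season 3: overall mean plus that season's effect
mean(y) + eff[3]                                  # 15
```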

Trend with Multiplicative Seasonality

Consider a successful swimwear company that generally experiences more robust sales each year but suffers from relatively low sales in the fall and especially in the winter. The highest quarter for sales is spring (probably mostly late spring) as customers prepare for summer, closely followed by summer. Sales continue to grow every year, but generally less so for fall and winter.

Multiplicative seasonality: The intensity of the regular seasonal swings up and down systematically increases or decreases in size across time.

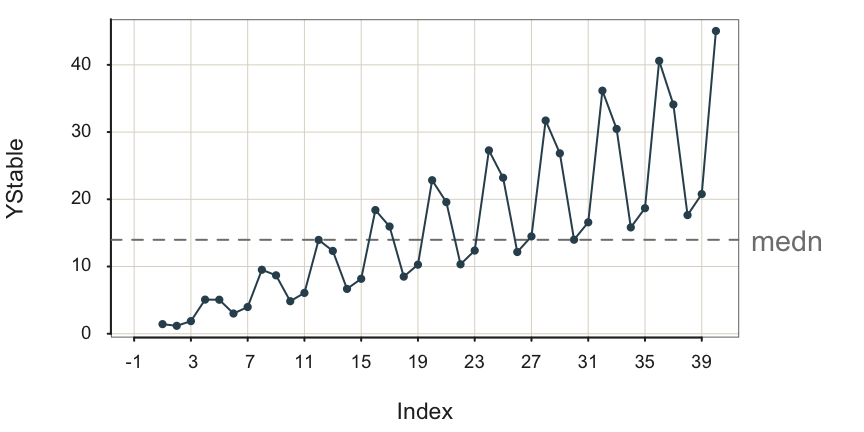

Following is an example of the underlying structure of quarterly geometric seasonality with an overall positive, linear trend. Because there is no random error for the sales variable YStable, the plot in Figure 14 is of the structure, not actual data, which are always confounded with random sampling error. To facilitate observing the pattern of seasonality, the first of the quarterly seasons, Winter, is displayed on a vertical grid line in Figure 14.

For example, consider Time 31, a Winter quarter. Although its sales are larger than those of any season in the early years, sales are low within the context of that year. Sales then increase substantially at Time 32, Spring, remain high but diminish slightly at Time 33, Summer, and then diminish again at Time 34, Fall, the fourth quarter. Winter sales then rise slightly compared to the Fall, perhaps as customers take advantage of sale prices or plan for next summer.

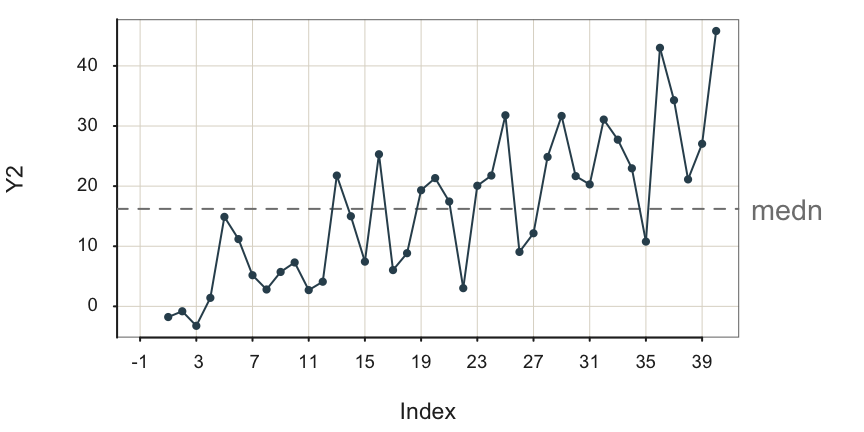

Given data, structure plus sampling error, the underlying, stable pattern is obscured to some extent but remains apparent.

With even more random error, shown in Figure 16, it is easy to miss the seasonal sales signal and falsely conclude that the up and down movements of the data over time are due only to random error, though the trend remains obvious.

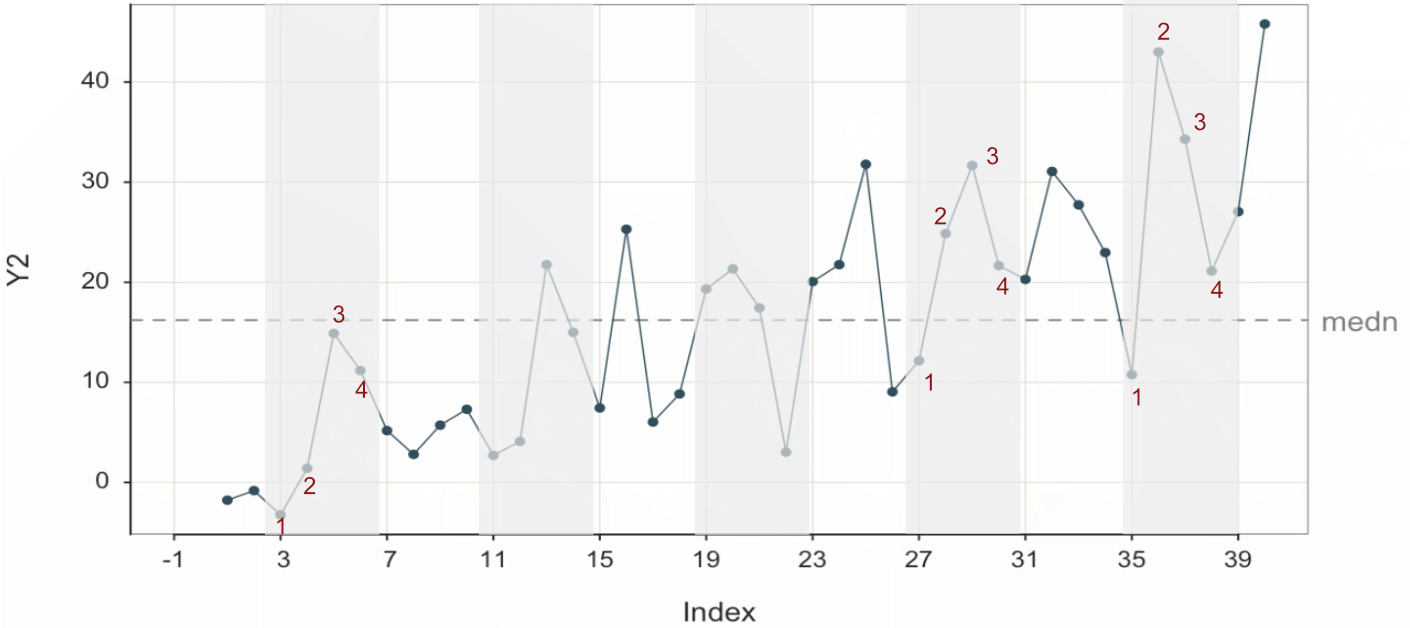

To help delineate the stable pattern, the signal, from the noise, Figure 17 highlights the groups of four seasonal data values from Figure 16. It explicitly numbers their seasonality for three groups.

Project the trend “line” into the future and then apply the seasonal effect to each corresponding forecasted value.

The process of forecasting is to discern the signal from the noise and then forecast from the signal. This is not always straightforward, but it is always more accessible with more data. Fortunately, analytic methods exist to disentangle the trend and seasonal components from each other and from random errors.
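One such method, sketched here under stated assumptions rather than as the text’s own procedure, is a simple multiplicative decomposition: fit a linear trend by least squares, estimate each season’s index as the average ratio of the data to the fitted trend, and forecast by multiplying the projected trend by the seasonal index. Because the seasonality is multiplicative, each seasonal effect enters as a multiplier rather than an added amount. The helper forecast_trend_mult() and the quarterly values are hypothetical; base R’s decompose() with type = "multiplicative" provides a more standard classical decomposition.

```r
# Trend with multiplicative seasonality: projected trend x seasonal index
forecast_trend_mult <- function(y, period, h) {
  idx <- seq_along(y)
  fit <- lm(y ~ idx)                                  # linear trend
  season <- rep_len(seq_len(period), length(y))       # season of each value (starts in season 1)
  ratio <- y / fitted(fit)                            # data relative to the trend line
  s_index <- tapply(ratio, season, mean)              # average ratio for each season
  s_index <- s_index / mean(s_index)                  # rescale so the indices average 1
  new_idx <- length(y) + seq_len(h)
  trend_fc <- predict(fit, newdata = data.frame(idx = new_idx))  # projected trend
  new_season <- (new_idx - 1) %% period + 1           # season of each future value
  as.vector(trend_fc * s_index[new_season])
}

# Hypothetical noise-free quarterly sales with seasonal indices for quarters 1 to 4
true_index <- c(0.8, 1.2, 1.1, 0.9)
q <- 1:40                                             # 10 years of quarters
sales <- (10 + q) * true_index[rep_len(1:4, 40)]
forecast_trend_mult(sales, period = 4, h = 4)         # next four quarters
```

Fitting the trend line directly through seasonal data gives only an approximate trend; more careful decompositions first smooth out the seasonality with a moving average.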

Here, the purpose is to visualize some of the various patterns in time-oriented data and to better understand how the random error always present in data obscures the underlying pattern. The goal is not only to rely upon analytic forecasting software but also to develop some visual intuition from examining these visualizations. The more structure the analyst can discern from a visualization, such as trend with geometric seasonality, the more effectively the analyst can use and understand the results provided by the analytic software.

Visualize Patterns as Time Series

A time series orders the values of a variable by time, just as a run chart does. However, the time series also provides the corresponding dates or times, usually plotted on the x-axis.

Time series: Plotted sequence of data values against the corresponding dates and/or times at which the values were recorded, usually at regular intervals.

The time series is one of the basic concepts for forecasting. Examples include ship times and inventory levels, any process that generates values over time. When forecasting a future value from past values of a variable, discover the underlying structure from the past, disentangled from the random variation. Then, extrapolate the past structure into the future.

Ultimately, we usually wish to use analytical forecasting methods to obtain a statistical forecast. However, the analysis begins with a visualization of the time series. Before extracting structure analytically, view the patterns present over time. The more you understand the structure of the time series before proceeding with analytical forecasting methods, the better you can direct the forecasting methods to obtain the most accurate forecast.

Example Data

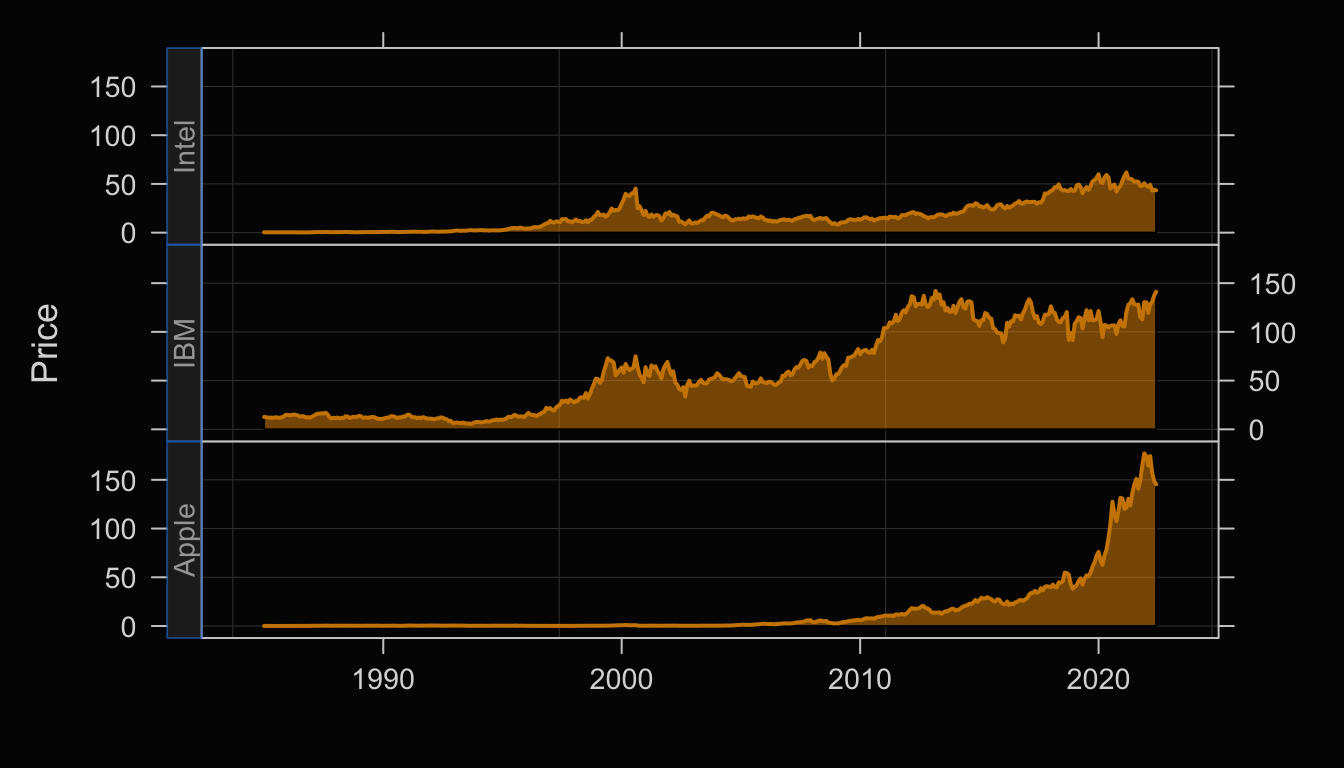

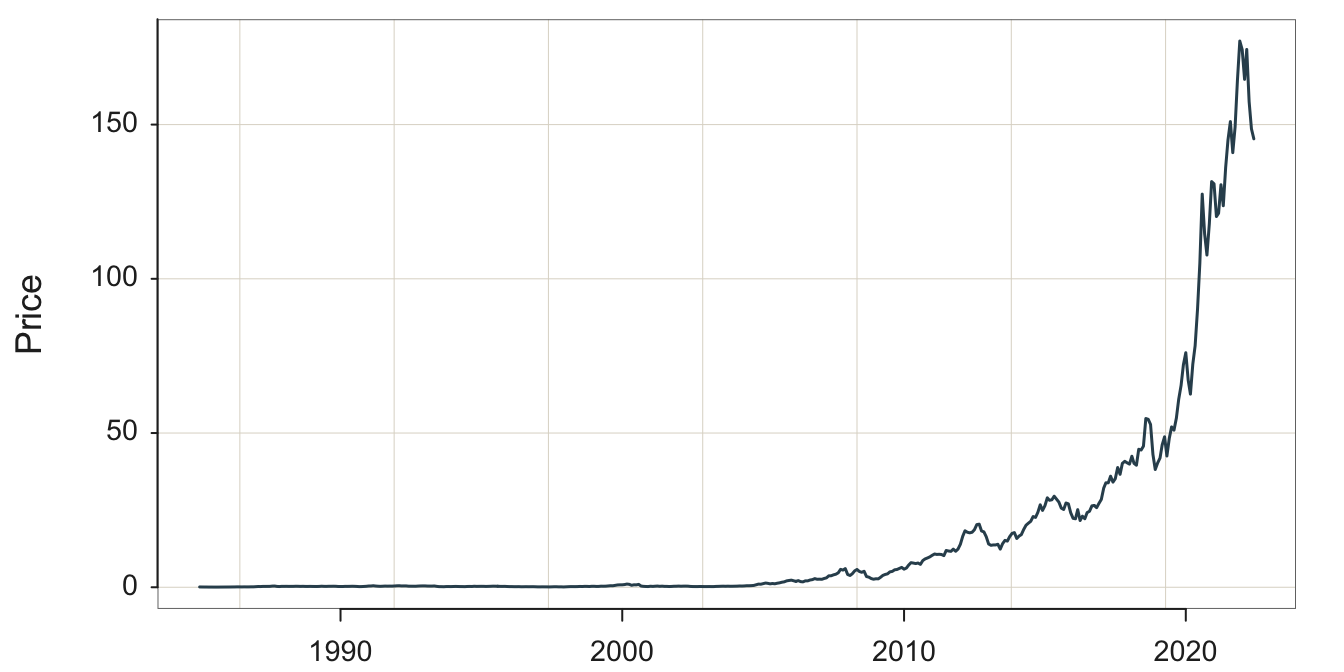

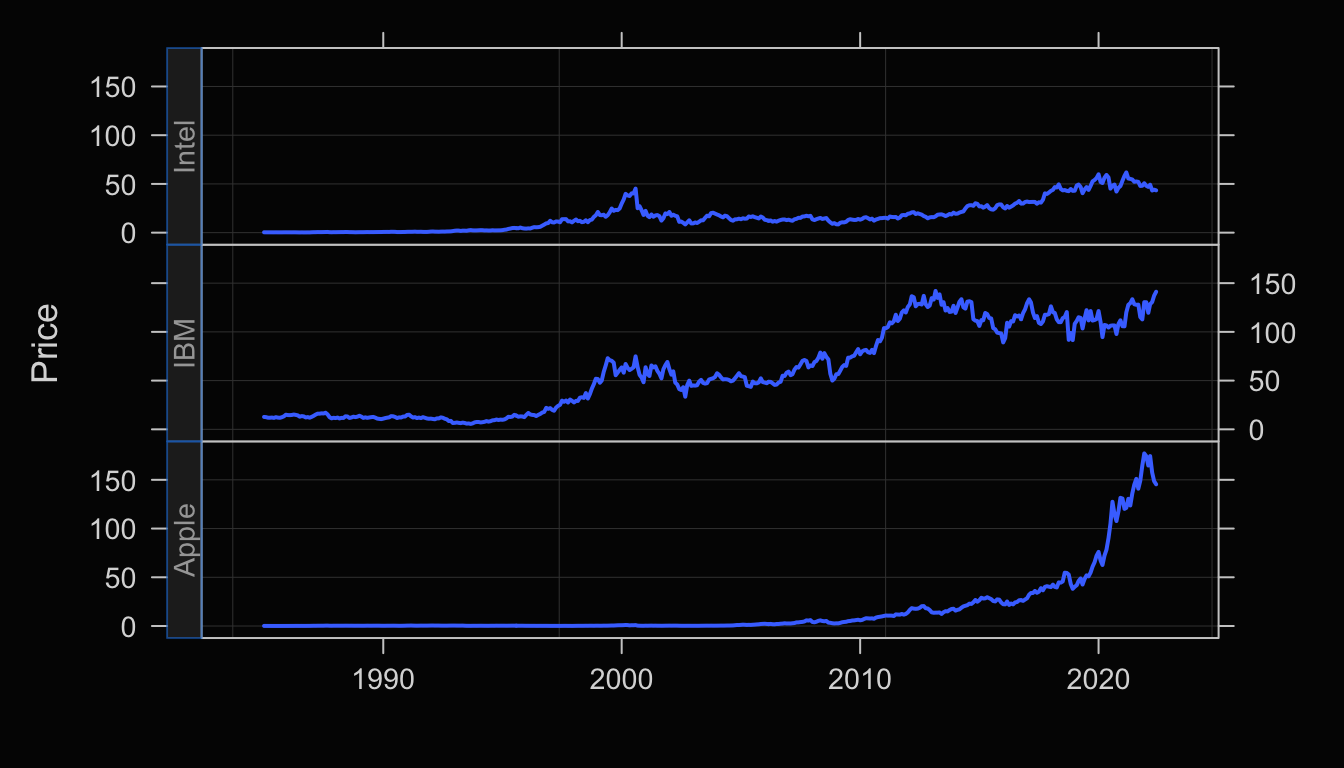

The data for the following examples are the monthly stock prices of Apple, IBM, and Intel from 1985 through mid-2022, obtained from finance.yahoo.com. The data were obtained from three separate downloads and then concatenated into a single file. The data are available on the web as an Excel file at the following location.

https://web.pdx.edu/~gerbing/data/StockPrice.xlsx

Make sure to enclose the file reference in quotes within the call to the lessR function Read().

d <- Read("https://web.pdx.edu/~gerbing/data/StockPrice.xlsx")

Data Types

- character: Non-numeric data values
- Date: Date with year, month and day
- double: Numeric data values with decimal digits

| | Variable Name | Type | Values | Missing Values | Unique Values | First and last values |
|---|---|---|---|---|---|---|
| 1 | Month | Date | 1350 | 0 | 450 | 1985-01-01 ... 2022-06-01 |
| 2 | Company | character | 1350 | 0 | 3 | Apple Apple ... Intel Intel |
| 3 | Price | double | 1350 | 0 | 1331 | 0.10105 0.086241 ... 44.071625 43.389999 |

The output of Read() provides useful information about the data file read into the R data frame, here d. Always review this information to make sure that the data were read and formatted correctly. The date variable Month was properly read as type Date.

If the data file were stored as a text file, R would not automatically translate the character strings of dates into a variable of type Date. In a text file, all data values are character strings. R translates character strings that are numbers into a numerical variable type when reading the data into an R data frame, but not so with dates, which remain character strings. The variable with these date fields must be explicitly converted to a variable of type Date with the as.Date() function. Storing the data as an Excel file avoids this extra step because Excel has already done the conversion.
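The conversion can be sketched with base R. This example reads a small CSV text containing the first three Apple rows of the data with base R’s read.csv() (the lessR function Read() is not used here, to avoid assumptions about how it handles dates) and then converts Month with as.Date().

```r
# A small CSV text with the first three Apple rows of the data
csv_text <- "Month,Company,Price
1985-01-01,Apple,0.101050
1985-02-01,Apple,0.086241
1985-03-01,Apple,0.077094"

d_txt <- read.csv(text = csv_text)
class(d_txt$Month)     # "character" in R 4.0.0 and later (a factor in earlier versions)
class(d_txt$Price)     # "numeric": numbers are converted automatically

# Explicitly convert the ISO 8601 date strings to type Date
d_txt$Month <- as.Date(d_txt$Month, format = "%Y-%m-%d")
class(d_txt$Month)     # "Date"
```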

There are three variables in the data table: Month, Company, and Price. To plot a time series, there must be a variable that contains the dates. If the date variable is present in the data file, it must be formatted as a date/time variable.

In addition to variable types for text strings and numbers, data analysis systems typically provide a variable type specifically for dates and times.

One advantage of storing the data table as an Excel file is that Excel does an excellent job recognizing and classifying data values as date values instead of character strings when appropriate. Because the data file is an Excel file in this example, this formatting has already been done. This formatting then typically transfers over to the analysis system that will create the visualization.

The file contains 1350 rows of data, with 450 unique dates reported monthly. The dates are repeated for each of the three companies. There is no missing data. The dates are stored according to the ISO 8601 international standard, which defines a four-digit year, a hyphen, a two-digit month, a hyphen, and then a two-digit day.
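As a quick consistency check on these counts, base R can generate the 450 monthly dates starting in January 1985. The format "%Y-%m-%d" is the ISO 8601 layout, which is also the default format that as.Date() tries first.

```r
# 450 consecutive monthly dates beginning January 1, 1985
months <- seq(as.Date("1985-01-01"), by = "month", length.out = 450)
format(range(months), "%Y-%m-%d")   # "1985-01-01" "2022-06-01"

# Each date appears once per company: 450 dates x 3 companies
length(months) * 3                  # 1350 rows
```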

ISO refers to the International Organization for Standardization, www.iso.org, the organization that sets global standards for goods and services.

Following are some sample rows of data. The first column of numbers contains not data values but row names.

- The first four rows of data, which are the first four rows of Apple data.

- The first four rows of IBM data, beginning on Row 451.

- The first four rows of Intel data, beginning on Row 901.

| | Month | Company | Price |
|---|---|---|---|
| 1 | 1985-01-01 | Apple | 0.101050 |
| 2 | 1985-02-01 | Apple | 0.086241 |
| 3 | 1985-03-01 | Apple | 0.077094 |
| 4 | 1985-04-01 | Apple | 0.074045 |

| | Month | Company | Price |
|---|---|---|---|
| 451 | 1985-01-01 | IBM | 12.71885 |
| 452 | 1985-02-01 | IBM | 12.49734 |
| 453 | 1985-03-01 | IBM | 11.94072 |
| 454 | 1985-04-01 | IBM | 11.89371 |

| | Month | Company | Price |
|---|---|---|---|
| 901 | 1985-01-01 | Intel | 0.379217 |
| 902 | 1985-02-01 | Intel | 0.345303 |
| 903 | 1985-03-01 | Intel | 0.342220 |
| 904 | 1985-04-01 | Intel | 0.339137 |

With the data, we can proceed to the visualizations.

One Time Series

We can plot the time series for any one of the three companies in the data table. Because the data file contains stock prices for three different companies, we need to subset the data with the process known as filtering.

Filter: Extract a subset of the entire data table for the specified analysis.

Every analysis system provides a way to filter the data. Set up the time series visualization by plotting share Price vs. Month, filtering the data so that only the stock price for Apple is visualized.

Plot with the lessR function Plot() in the form Plot(x,y). When the x-variable is a date, here named Month, Plot() creates a time series visualization instead of an x-y scatterplot. The y-variable in this example is Price.

Plot(Month, Price, rows=(Company=="Apple"))

rows: Parameter to specify the logical condition for selecting rows of data for the analysis. Note that in versions of lessR prior to 4.3.3, this parameter was named rows.

To visualize the data for only one company, we need to select just the rows of data for that company. Select specified rows from the data table for analysis according to a logical condition.

- The R double equal sign, ==, means is equal to.

- The == does not assign a value; it evaluates equality, resulting in a value that is either TRUE or FALSE.

- The expression (Company=="Apple") evaluates to TRUE only for those rows of data for which the data value for the variable Company equals "Apple".
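The same logical condition filters rows in base R. This sketch is a base R counterpart, not a description of how lessR implements rows; the small data frame d_small is hypothetical, built from the first two monthly prices of Apple and IBM in the data.

```r
# Small data frame in the same layout as the stock price data
d_small <- data.frame(
  Month   = as.Date(c("1985-01-01", "1985-02-01", "1985-01-01", "1985-02-01")),
  Company = c("Apple", "Apple", "IBM", "IBM"),
  Price   = c(0.101050, 0.086241, 12.71885, 12.49734)
)

d_small$Company == "Apple"                       # TRUE TRUE FALSE FALSE
apple <- d_small[d_small$Company == "Apple", ]   # keep only the rows that are TRUE
apple

# Base R time series plot of the filtered rows: Price against Month
plot(apple$Month, apple$Price, type = "l", xlab = "Month", ylab = "Price")
```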

size: Parameter to specify the size of the plotted points. By default, when plotting a time series, lessR sets the size of the points to 0. Set size to a positive number to display the plotted points, which by default are connected with line segments.

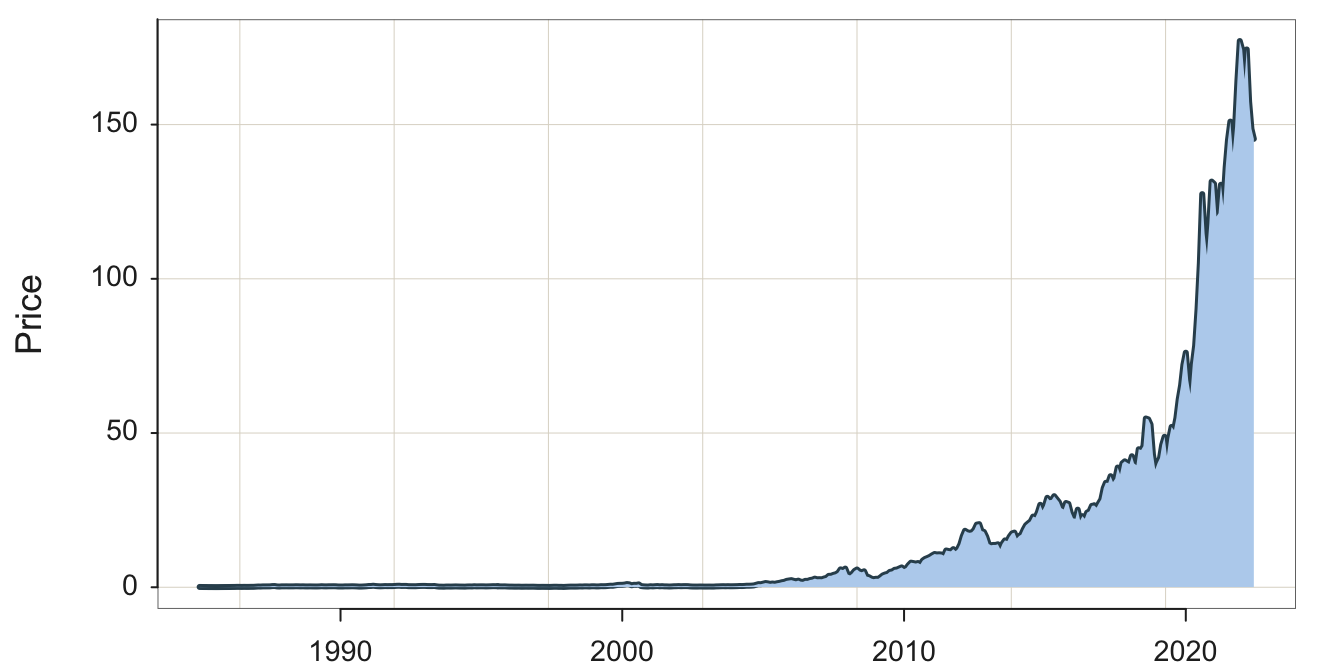

A desirable option that data visualization systems typically offer is the ability to fill the area under the curve to highlight the form of the plotted time series, a visualization often referred to as an area chart.

Plot(Month, Price, rows=(Company=="Apple"), area_fill="slategray2", lwd=3)

area_fill: Parameter to fill the area under the curve. Set the value to "on" to obtain the default fill color for the given color theme, or specify a particular color, such as with a color name.

lwd: Parameter to specify the line width of the time series curve. In the accompanying plot it was set to 3 instead of its default value of 1.5 to increase the thickness of the plotted line.

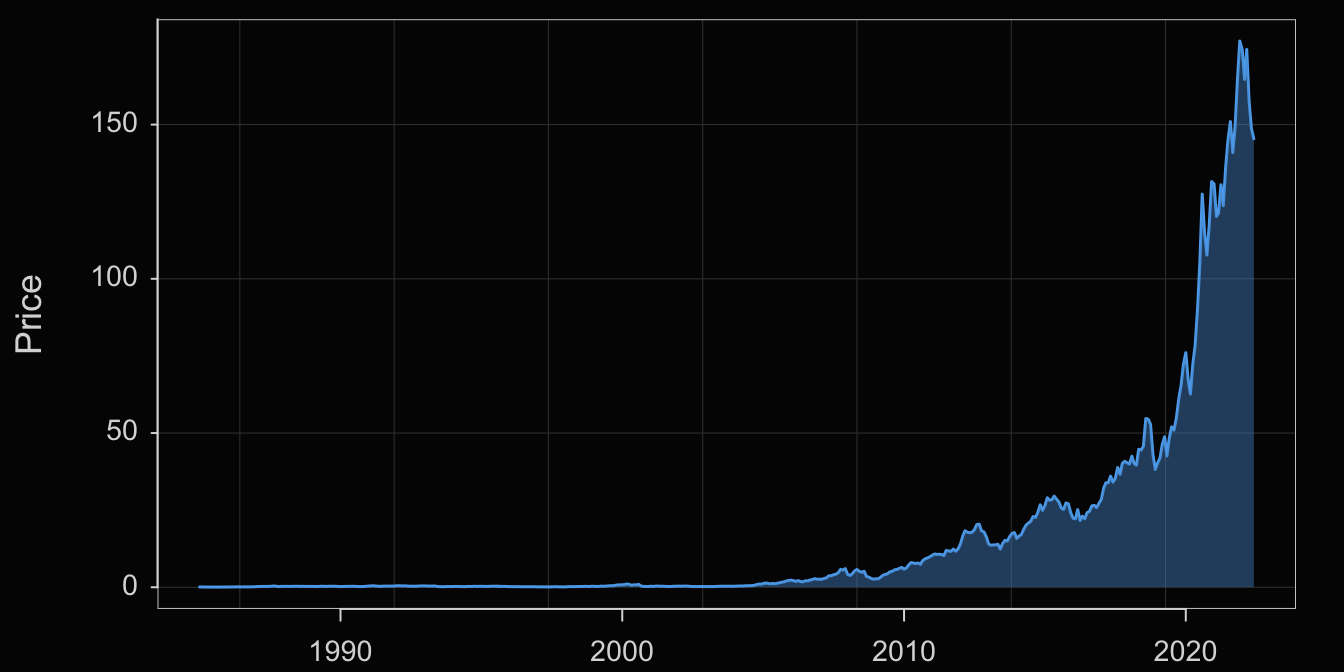

Visualization systems also offer many customization options such as for colors. We will not explore these customizations here in any detail, but offer the following example.

style(sub_theme="black")

Plot(Month, Price, rows=(Company=="Apple"), color="steelblue2", area_fill="steelblue3", trans=.55)

style(): lessR function to set many style parameters. Here, set the background to black by setting the sub_theme parameter. Styles set with style() are persistent, that is, they remain set across the remaining visualizations until explicitly changed.

color: Parameter that sets the line color.

area_fill: Parameter that sets the color of the area under the curve.

transparency: Parameter to set the transparency level, which can be shortened to trans. The value is a proportion from 0, no transparency, to 1, complete transparency, that is, invisible.

Several Time Series

One Panel

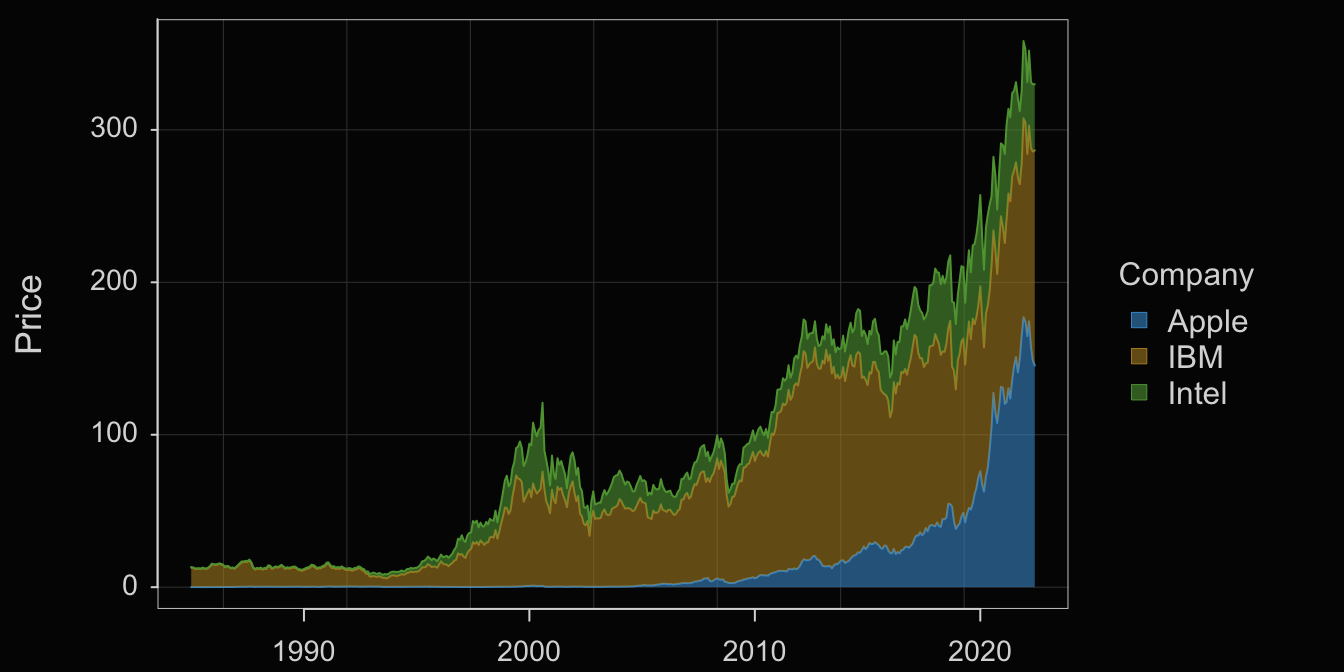

The variable Company in this data table is a categorical variable with three values: Apple, IBM, and Intel. Visualization systems typically offer options to stratify time series plots by a categorical variable such as Company. One option plots all three time series on the same panel.

Plot(Month, Price, by=Company)

by: Parameter that specifies to plot a different visualization for each value of a specified categorical variable on the same panel.

Another option that some visualization systems offer for plotting multiple time series on the same panel is to stack the time series on top of each other, often called a stacked area chart.

Plot(Month, Price, by=Company, stack=TRUE, trans=0.4)

Set the parameter stack to TRUE to stack the plots on top of each other. When stacked, the Price variable on the y-axis is the sum of the corresponding Price values for each Company. The y-value for Apple at each date is its actual value because it is listed first (alphabetically by default). The y-value for IBM is the corresponding value for Apple plus IBM’s value. And, for Intel, listed last, each point on the y-axis is the sum of all three prices.
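The stacking arithmetic amounts to a cumulative sum across the companies at each date. A minimal base R sketch using the January 1985 prices from the sample rows of the data, in alphabetical order:

```r
# Prices on 1985-01-01, companies in alphabetical order
jan_1985 <- c(Apple = 0.101050, IBM = 12.71885, Intel = 0.379217)

# Stacked y-values: each company's price added to the sum of those listed before it
stacked <- cumsum(jan_1985)
stacked    # cumulative values 0.10105, 12.8199, 13.199117
```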

Different Panels

A Trellis plot, also called a facet plot, stratifies the visualization on the levels of a categorical variable by plotting each level separately on a different panel.

Plot(Month, Price, by1=Company)

by1: Parameter that indicates to plot each time series on a separate panel according to the levels of the specified categorical variable.

Enhance the Trellis plot with the transparent orange fill against the black background, shown in Figure 19.

Plot(Month, Price, by1=Company, color="orange3", area_fill="orange1", trans=.55)